The Centre for Internet and Human Rights at European University Viadrina, in association with the Center for Technology and Society at TU Berlin, hosted a two-day event, The Ethics of Algorithms (EOA2015). EOA2015 took place in Berlin on March 9-10, 2015. Day two was an intense debate on the ethics of algorithms with input from European and American experts.

This is the first part of my notes, which aim to take the debate beyond the local event. EOA2015 was held under the Chatham House Rule, but keynote speaker Zeynep Tufekci from the University of North Carolina at Chapel Hill agreed to have her talk publicly quoted.

Human after All.

Even though the event was dubbed Ethics of Algorithms, we always came back to human ethics. For the time being, it is humans who write the code and algorithms that shape most areas of our digitalized lives. Mrs Tufekci concluded:

"We are so not ready for computer decision-making, because human decision-making is already flawed."

She further argued that "we are empowering machines to make decisions for us where we don't have a yardstick." Since there are no proper models for making objective human decisions, algorithms developed by humans will always have these flaws baked in. I think this is a really good point.

However, added Mrs Tufekci, "there are a lot of areas of our life where the computational algorithmic methods will come up with better results."

Automation and Algorithms are taking over most Areas of our Lives.

Mrs Tufekci compiled a comprehensive list of applications where she sees computational decision-making unfold its full potential: predictive policing (e.g. flagging who might be a terrorist), assessing health care or financial risks and, of course, organizing all sorts of media content on search and social platforms.

This development is a matter of great concern to her.

"The tools that were available have drastically changed, and the amount of available data has drastically changed in the last ten years. (...) This is an information asymmetry that really concerns me."

Typically, either corporations or governments are in the position of power here. They need to collect tremendous amounts of data to feed their algorithmic systems, be it to flag potential terrorists (dragnet investigation) or to provide a streamlined media and advertising experience (social network feed).

Chesley Sullenberger vs. the Machine.

"Flying is much safer because of automation."

Mrs Tufekci is right about this, but the statement also has its limits.

There are certainly a couple of machines today that may work better than humans, but only in a very specific environment. For example: staying on course in a controlled environment with limited variables, e.g. at cruising altitude over the Atlantic Ocean without thunderstorms, or landing on a runway without rain or crosswinds.

But machines and their algorithms have a tough time adapting to new, unfamiliar environments when there is no time to learn about them. During the discussion, the vacuuming robot Roomba came up as an example.

Back in Hamburg, however, Martin Kleppe, a developer friend, said that Roomba was not quite an adequate example in this discussion, because it "does not really learn but rather runs 'brainless' algorithms, not based on the human biology but the animal and insect world". Martin pointed me to two articles explaining more (1,2). In the second one, a Roomba representative is quoted:

"The patterns that we chose and how the algorithm was originally developed was based off of behavior-based algorithms born out of MIT studying animals and how they go about searching areas for food. When you look at how ants and bees go out and they search areas, these kinds of coverage and figuring all of that out comes from that research."
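To make the idea of a 'brainless', behavior-based search a bit more tangible, here is a minimal sketch in Python. It is not Roomba's actual algorithm, which I do not know in detail. It is a toy model under my own assumptions: a rectangular room modelled as a grid, a robot without any map, and just three hard-wired behaviors (drive straight, occasionally wander, turn at random after a bump). The function name `random_bounce_coverage` and all its parameters are made up for illustration.

```python
import random

# Four compass headings as (dx, dy) steps on the grid.
HEADINGS = [(1, 0), (0, 1), (-1, 0), (0, -1)]


def random_bounce_coverage(width, height, steps, turn_prob=0.1, seed=0):
    """Simulate a map-less robot and return the fraction of cells it visited.

    Toy model only: the robot never learns and never remembers where it
    has been. The `visited` set exists purely so we can measure coverage.
    """
    rng = random.Random(seed)
    x, y = 0, 0                      # start in a corner of the room
    dx, dy = rng.choice(HEADINGS)
    visited = {(x, y)}

    for _ in range(steps):
        # Behavior: occasionally wander off in a new random direction.
        if rng.random() < turn_prob:
            dx, dy = rng.choice(HEADINGS)

        nx, ny = x + dx, y + dy
        if 0 <= nx < width and 0 <= ny < height:
            # Behavior: the way is free, so keep driving straight.
            x, y = nx, ny
            visited.add((x, y))
        else:
            # Behavior: bumped into a wall, so pick a new random heading.
            dx, dy = rng.choice(HEADINGS)

    return len(visited) / (width * height)


if __name__ == "__main__":
    for n in (100, 1000, 10000):
        share = random_bounce_coverage(10, 10, n)
        print(f"{n} steps: {share:.0%} of the room covered")
```

Even without any learning, the share of the room covered can only grow the longer the robot runs, which is roughly the point Martin made: simple reactive rules can be enough for a well-defined task, but nothing in them adapts to a situation the rules did not anticipate.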

One thing is for sure: you certainly do not want to be on board a plane that is either running on 'brainless' algorithms or still in learning mode when it has to perform an emergency water landing on the Hudson River.

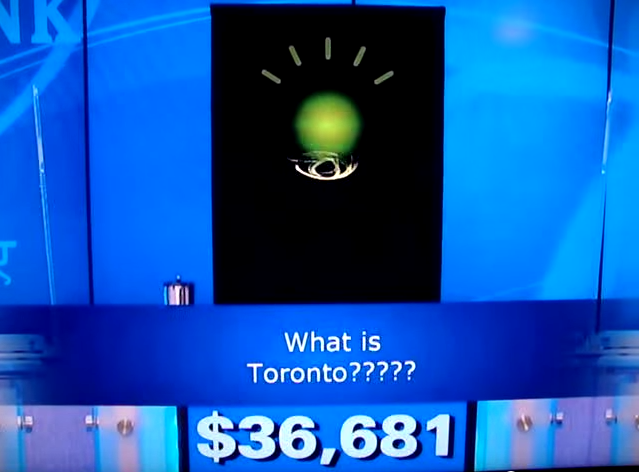

What is Toronto? Machines make different kinds of mistakes.

Mrs Tufekci cited IBM Watson's appearance on Jeopardy! as an example to point out that machines make mistakes that a human equipped with the appropriate knowledge would not. Watson came up with the question "What is (Canadian city) Toronto?" in response to a clue in the category 'U.S. Cities'.

Taking this inaccuracy into account, Mrs Tufekci drew an interesting comparison for the machine learning approach:

"It's like raising a kid of sorts and not knowing what it is going to do."

Future machines may very well no longer be programmed by rules in the traditional way. Instead, they will be able to adapt their behavior based on the feedback they collect. Like Roomba, but hopefully equipped with better algorithmic brains: here is a bunch of objects. Try to figure out what they are. And draw your own mental map of the world with them.

Long-exposure photo showing the adaptive pattern followed by a Roomba. Image courtesy of signaltheorist.com.

A Black Mirror Effect: the Future is already Here.

It is important to acknowledge that companies such as Google are already looking into machine learning. In 2012, the company's mythical Google X research lab built a neural network of 16,000 computer processors with one billion connections.

Their mission: to build a face detector based on machine learning.

The project team concluded:

"Contrary to what appears to be a widely-held intuition, our experimental results reveal that it is possible to train a face detector without having to label images as containing a face or not."

But it is not only private corporations that are interested in this field. Governments also see a lot of potential in it: the European Union has initiated the Human Brain Project, including a project target to capitalize on its research in software and computing:

"Understanding how the brain computes reliably with unreliable elements, and how different elements of the brain communicate, can provide the key to a completely new category of hardware (Neuromorphic Computing Systems) and to a paradigm shift for computing as a whole. The economic and industrial impact is potentially enormous."

With the economic, political and societal relevance of algorithmic and learning-based computation peaking, EOA2015 made clear that action is in fact required.

Computational Ethics: It's now or never.

A computer science teacher put the problem in a nutshell:

"You can get through a computer science course until PhD without ever touching humanist topics."

The matter of ethics in educating current and future developers is an urgent one, especially because there are more and more "automated systems all around us, systems we don't understand, systems we cannot control". It applies not only to developers and computer scientists but also to concept developers, strategists and creative technologists, basically anyone working on the creation of algorithm-related computation and its ecosystems. This technology has become ubiquitous and will influence and impact our lives more and more:

"These are things that impact real people's real lives on a constant, daily level."

It is high time for us as a society to stop merely observing and to take on our responsibility as creators, critics and academics.

This leads directly to the debate around Digital Bauhaus held in Weimar in June 2014, and to my contribution: Inspiration for a Digital Bauhaus.

The fine folks from Netzpolitik were kind enough to record a video of Mrs Tufekci's keynote, which you can watch on YouTube.